McLure et al. (2011) developed a code to analyse the ultra-deep IRAC imaging available in the Hubble Ultra Deep Field (HUDF), using HST H160-band imaging as prior information.

The basic algorithm is very similar to that employed by TFIT and CONVPHOT. It is written in Fortran, allows for FFT convolution of the high-resolution cutouts, and uses a standard Gauss-Jordan elimination routine to solve the linear system. The images are fitted as a whole, without subdivision into cells.
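The template-fitting step described above can be illustrated with a minimal sketch. It is not the McLure et al. code, which is written in Fortran; it is a Python/NumPy illustration of the general approach under several assumptions. The function names `fft_convolve_same` and `fit_template_amplitudes` are hypothetical. Each high-resolution cutout is assumed to be already resampled onto the low-resolution pixel grid and padded to the full image size, and `kernel` is assumed to be the convolution kernel matching the high-resolution PSF to the IRAC PSF. The linear system is solved with `numpy.linalg.solve` (an LU-based LAPACK solver) in place of Gauss-Jordan elimination; for a well-conditioned system both give the same amplitudes.

```python
import numpy as np


def fft_convolve_same(image, kernel):
    """Zero-padded linear convolution via FFT, cropped back to image.shape."""
    ny, nx = image.shape
    ky, kx = kernel.shape
    shape = (ny + ky - 1, nx + kx - 1)  # size needed to avoid wrap-around
    product = np.fft.rfft2(image, shape) * np.fft.rfft2(kernel, shape)
    full = np.fft.irfft2(product, shape)
    y0, x0 = (ky - 1) // 2, (kx - 1) // 2  # offset of the kernel centre
    return full[y0:y0 + ny, x0:x0 + nx]


def fit_template_amplitudes(low_res, cutouts, kernel, weight=None):
    """Fit all source amplitudes simultaneously over the whole image.

    low_res : 2-D low-resolution image (e.g. IRAC)
    cutouts : list of 2-D high-resolution source images on the same grid
    kernel  : 2-D PSF-matching kernel (assumed known)
    weight  : optional 2-D inverse-variance map; uniform if omitted
    """
    # One smoothed template per source, flattened to shape (n_src, n_pix).
    templates = np.array([fft_convolve_same(c, kernel).ravel() for c in cutouts])
    w = np.ones(low_res.size) if weight is None else weight.ravel()

    # Normal equations of the weighted least-squares problem:
    #   A[i, j] = sum_p w_p T_i(p) T_j(p),   b[i] = sum_p w_p T_i(p) I(p)
    A = (templates * w) @ templates.T
    b = (templates * w) @ low_res.ravel()

    amplitudes = np.linalg.solve(A, b)  # LU solve, not Gauss-Jordan
    model = (amplitudes @ templates).reshape(low_res.shape)
    return amplitudes, model
```

Subtracting the returned `model` from `low_res` gives a residual image. Because the whole image is fitted in a single system, the matrix `A` has one row and column per source, so its size grows with the square of the number of sources.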

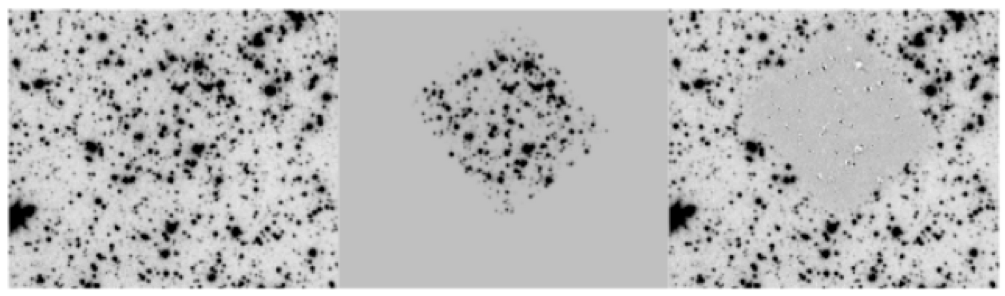

Illustration of the IRAC deconfusion algorithm developed by McLure et al. (2011). The left-hand panel shows the inverse-variance weighted stack of the epoch1+epoch2 4.5 μm imaging covering the HUDF. The middle panel shows the best-fitting model of the IRAC data, based on using the H160 WFC3/IR imaging to provide model templates, and a matrix inversion procedure to determine the best-fitting template amplitude. The right-hand panel shows the model-subtracted image (note that the WFC3/IR imaging does not cover the full area of the HUDF).